About This Project

What is it?

This Fake News Detector is a machine learning tool built to automatically classify news content as real or fake using a fine-tuned BERT language model.

Why was it built?

In the age of viral misinformation, detecting fake news is critical. This tool aims to help researchers, educators, and developers explore how NLP and modern deep learning techniques can be used to fight it.

Performance

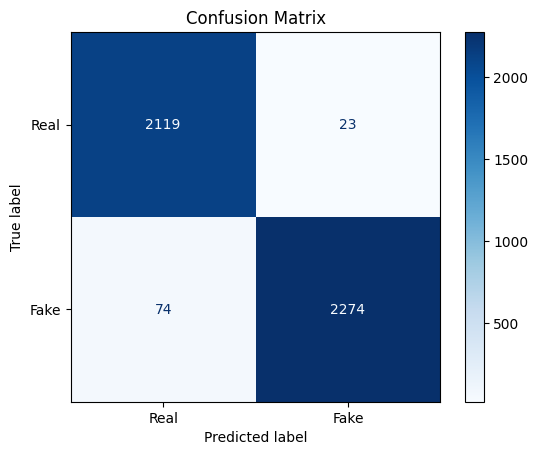

The model achieves about 97.8% accuracy on the test set (4,393 of 4,490 items classified correctly), as shown by the confusion matrix:

- 2119 real news items were correctly predicted as real

- 2274 fake news items were correctly predicted as fake

- 23 real items were wrongly predicted as fake

- 74 fake items were wrongly predicted as real
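
The counts above are enough to recompute the headline accuracy and a few related metrics. The snippet below is a minimal sketch of that arithmetic; treating "fake" as the positive class and the helper name `confusion_metrics` are assumptions made for illustration, not part of the project code.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Return basic classification metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "total": total,
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Counts copied from the list above; "fake" is the positive class (assumption).
metrics = confusion_metrics(
    tp=2274,  # fake predicted as fake
    tn=2119,  # real predicted as real
    fp=23,    # real predicted as fake
    fn=74,    # fake predicted as real
)

for name, value in metrics.items():
    print(f"{name}: {value:.4f}" if name != "total" else f"{name}: {value}")
```

Under this convention, precision is the share of "fake" predictions that were right, and recall is the share of fake items that were caught. The 74 fake items predicted as real lower recall more than the 23 false alarms lower precision.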

The model is trained on a dataset derived from the Kaggle source fake-and-real-news, which contains 23,502 fake news articles and 21,417 true news articles.
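
As a quick sanity check on the dataset figures, the sketch below computes the class balance and the accuracy that a trivial "always predict the majority class" baseline would reach on data with the same proportions. The variable names are illustrative; only the two article counts come from the text above.

```python
# Article counts as stated above.
fake_articles = 23_502
true_articles = 21_417

total_articles = fake_articles + true_articles
fake_share = fake_articles / total_articles

# Accuracy of always predicting the larger class, assuming the same class proportions.
majority_baseline = max(fake_articles, true_articles) / total_articles

print(f"total articles: {total_articles}")
print(f"fake share: {fake_share:.3f}")
print(f"majority-class baseline accuracy: {majority_baseline:.3f}")
```

Because the two classes are close to balanced, accuracy is a reasonable headline metric here.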

How It Works

Model: Fine-tuned BERT from Hugging Face Transformers

Input features: News statement, Title

Output:

- Binary Classification – Real or Fake

- SHAP waterfall plot – visually highlighting the most influential words that contributed to the model’s decision.

Training Method

The model is fine-tuned on a binary classification task using BERT-base-uncased from Hugging Face Transformers.

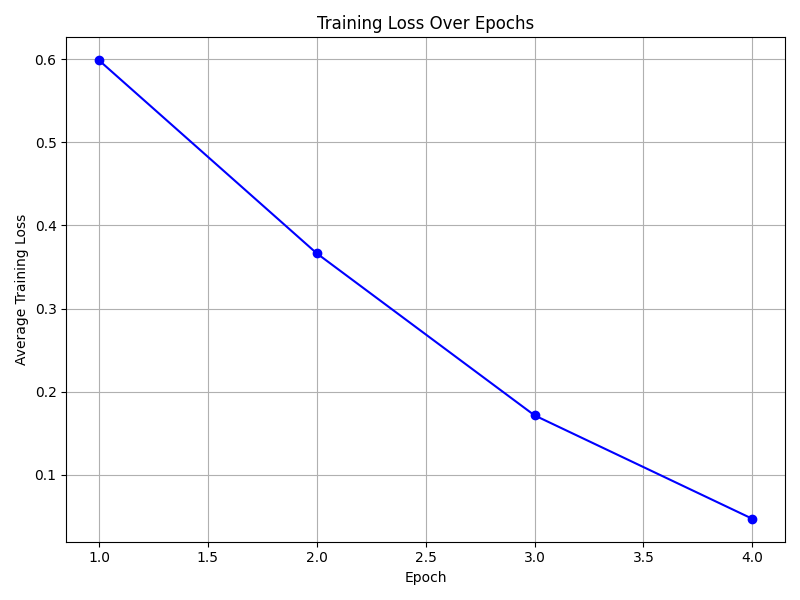

It was trained with cross-entropy loss and the Adam optimizer for 4 epochs, with a batch size of 32 and a learning rate of 1e-5.

During training, the BERT encoder was partially frozen (only the last two layers were left unfrozen) to reduce overfitting and speed up convergence. The tokenized input consisted of the title and a truncated portion of the statement, because long inputs were bottlenecked by the available GPU capacity.
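
One way to express the partial freezing described above is to toggle `requires_grad` by parameter name. The sketch below is not the project's actual training code: the helper `freeze_all_but_last_layers` is hypothetical, the name prefixes follow the naming that Hugging Face's `BertForSequenceClassification` uses for its parameters, and keeping the classifier head and pooler trainable is an assumption. Stand-in objects replace real tensors so the example runs without PyTorch.

```python
from types import SimpleNamespace


def freeze_all_but_last_layers(named_params, num_layers=12, n_unfrozen=2,
                               always_trainable=("classifier.", "bert.pooler.")):
    """Leave only the last `n_unfrozen` encoder layers (plus the head) trainable.

    `named_params` is an iterable of (name, param) pairs, such as the result of
    `model.named_parameters()`. Returns the names left trainable.
    """
    first_unfrozen = num_layers - n_unfrozen
    trainable_prefixes = tuple(
        f"bert.encoder.layer.{i}." for i in range(first_unfrozen, num_layers)
    ) + tuple(always_trainable)  # assumption: head and pooler stay trainable

    trainable_names = []
    for name, param in named_params:
        param.requires_grad = name.startswith(trainable_prefixes)
        if param.requires_grad:
            trainable_names.append(name)
    return trainable_names


# Stand-in parameters (not real tensors) to show the selection rule.
demo_params = [
    ("bert.embeddings.word_embeddings.weight", SimpleNamespace(requires_grad=True)),
    ("bert.encoder.layer.1.output.dense.weight", SimpleNamespace(requires_grad=True)),
    ("bert.encoder.layer.10.output.dense.weight", SimpleNamespace(requires_grad=True)),
    ("bert.encoder.layer.11.attention.self.query.weight", SimpleNamespace(requires_grad=True)),
    ("classifier.weight", SimpleNamespace(requires_grad=True)),
]
trainable = freeze_all_but_last_layers(demo_params)
print(trainable)

# With a real model (requires torch and transformers), the call would look like:
#   model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)
#   freeze_all_but_last_layers(model.named_parameters())
```

With `bert-base-uncased`, which has 12 encoder layers, this leaves layers 10 and 11 trainable and freezes the embeddings and layers 0 through 9.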

As the image above shows, the training loss starts at around 0.6 and ends near 0.05 after 4 epochs.
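
To put these loss values in context, the sketch below computes the cross-entropy of a single two-class example from raw logits. It is a plain-Python illustration, not the project's loss code, and the logit values are made up. A model that gives both classes equal scores has a loss of ln 2 (about 0.693), close to the starting loss reported above, while a loss near 0.05 corresponds to the correct class receiving a probability of roughly 0.95.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy for one example: -log(softmax(logits)[label])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


# Equal logits: the model is undecided between the two classes.
chance_loss = cross_entropy([0.0, 0.0], 0)

# Illustrative logits where the correct class leads by a margin of 3.
confident_loss = cross_entropy([3.0, 0.0], 0)

print(f"undecided model: {chance_loss:.4f}")
print(f"confident model: {confident_loss:.4f}")
print(f"probability implied by a loss of 0.05: {math.exp(-0.05):.3f}")
```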